Who knows what we're doing? | Real teams doing AI

AI software development is exactly like any other software development, and completely different at the same time. AI is the process that blurs the boundary between data and software. The data becomes the algorithm. It's the ultimate implementation of the vision of Alan Turing's universal machine.

Photo by Egor Myznik on Unsplash

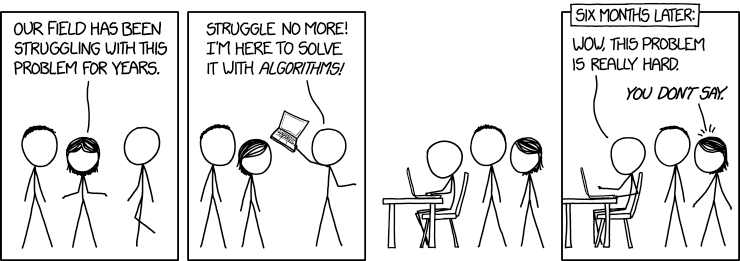

In my early AI initiatives, I often found myself frustrated because I felt that many team members just didn't get what we were trying to do.

This was quite discouraging. I couldn't understand why people didn't get it. I was used to being well understood by teams and to communicating clearly and precisely, so that people knew what they had to do. Why wasn't it working on these types of projects, even when I could tell that the people on the project were all smart and willing?

I eventually realized that it boiled down, like most team performance problems, to a set of flawed assumptions that created a disconnect in expectations. Here's how I broke them down:

- Me: I was assuming that, by explaining in detail what customer research had uncovered and having POs translate that into product and data features, each member of the self-organized Agile team would apply their expertise, take ownership of the goal and figure out their piece of it, just like in my dreams.

- The data processing team: they expected to receive data to process and transform according to specs, annotators to integrate and data fields to index, but assumed that their job was not to care much about the meaning of the data. After all, we have AI people and subject matter experts for that, and they know what they're doing.

- The subject matter experts: they assumed that since the AI team and the business seemed to know where this was going, and since there was a data processing team, they would receive a clean dataset and clear instructions for what to do to prepare it. After all, this is an AI project, and the AI and data experts must know what they expect from us.

- The AI team: they assumed that if the business was able to state clearly and in detail what would happen in the product features, then the business experts had already figured out the information part, and the data processing team would take care of delivering the right data to the right fields. After all, the data is where the expertise is. Our job is to find the most efficient way to compute training data to make the most accurate predictions.

Note that everybody expects someone else to actually understand and deal with the difficult data problem. It took me a long time to clearly identify this circle of implicit, mismatched expectations, and until I did, it remained a hidden obstacle to velocity and a source of frustration.

As we've discussed here before, the most important and difficult part of an AI initiative is to actually understand in detail what needs to be done to what data.

Yet, in many non-tech-core businesses, development is culturally seen as a technical activity, done to spec, that is about building platforms and UIs. Intellectual Property, i.e. valuable information and data content, is dealt with in "the business". Accordingly, "the business" people in such organizations expect to provide UI sketches and rote functional descriptions to development, who will sort out the implementation details. That's the legacy model.

With AI, however, information and data content are no longer just something to be formatted and displayed. In a very real way, the information content becomes a functional part of the software. This creates a requirements gap between information curators and feature developers.

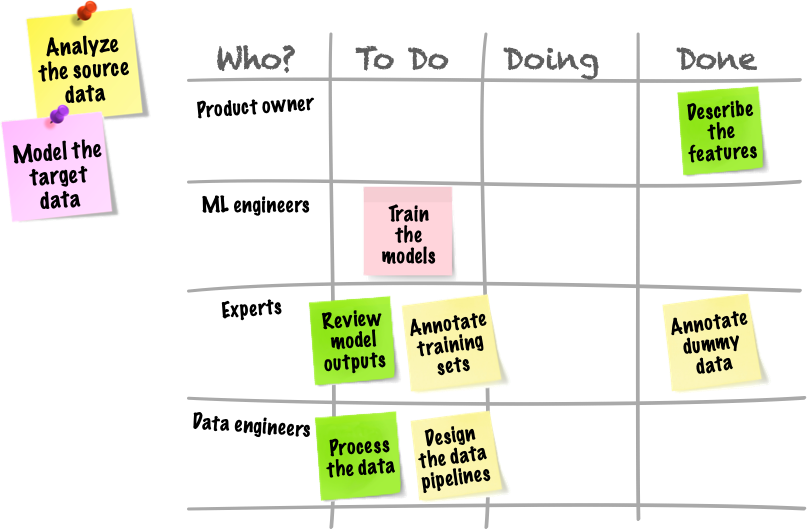

The traditional division of intellectual labor in creating specifications has become blurred. AI projects therefore require activities and roles in the team that didn't exist before. The solution to this problem, it turns out, is to make data analysis a core project activity.

Duh.

Analyzing and fine-tuning how the data model matches the actual raw data is a bona fide special task that, in most business units, nobody has done before, and nobody can assume that somebody else has it figured out. Just as it's everybody's job, when dev teams build features, to do their part in making sure those features work properly, it's also everybody's job, when data algorithms need to be specified, to make sure they make sense.

What is the job of this data analysis role?

What data is there to be analyzed and what questions is the analysis expected to answer?

As far as source and origin go, for the purposes of the distinction that I want to make here, there are two kinds of data:

- User-generated data: this data is created by your users' activity. It ranges from the history of their activity, such as the links they clicked, to their notes and the folder names attached to the items they selected (maybe their cart or their folders of saved items), to the text they typed (notes they took, queries they wrote, messages and comments they left). It can also be personal data, financial data, etc., or even product data if you're a marketplace and users also list products on your platform.

- Content IP data: this is information and data produced by subject matter experts: journalists, analysts, editors, scientists, lawyers, engineers and all kinds of other experts. They may work for your company, or the data may be open or public. The key is that this data is created with the intent of imparting, collecting or evaluating information in some way, and has an expert or authoritative bent.

No doubt one could identify additional categories of data, but from the point of view of the data analysis job, the distinction I want to make is this: I have experienced a completely different kind of team dynamic in cases where we had trace or user data to understand, as opposed to cases where the data is curated information produced by experts.

In the case of user-generated data, it turns out to be quite natural for the team to organize around analyzing it. Nobody can credibly claim to already know everything about the user; everybody is curious to discover what the hell the user is doing, and tries to find patterns of behavior in these people, whom they take as an object of study. Most people in such cases can also identify with the user and put themselves in the user's shoes.

In the case where expert data is the object, on the other hand, and especially where some of the experts are on the team, it takes some effort to overcome the terrible duo of assumptions that a) we already understand everything there is to understand about this data, because we are the experts in it, and b) the expertise that we have about that data is ancestral, deep and unique, and there's nothing a machine or a non-expert can discover that we don't already know.

Of course, unchecked assumptions are always a bane, but when your object of analysis is information that some of your teammates have been curating forever, the level of unchecked assumptions is off the charts. Not only do experts tend to think they already know, with their cognitive and confirmation biases typically getting in the way of new insights about their field, but this is compounded by other team members reinforcing it: they don't understand the subject matter of the data themselves, so they also assume that the experts already know all there is to know, and don't feel legitimate in questioning it.

When you set up your AI teams, make sure that the team documents how they will establish the sources of truth, how this truth will be challenged and enriched by other angles of analysis, and who's accountable to whom for understanding what. In other words, make sure that nobody assumes that other team members are responsible for understanding what's really going on with the data and knowledge from end to end. Everybody needs to understand all of it, at least well enough to explain it to their grandparents.
