Let's solve a problem with AI! | Real teams doing AI

How do small teams in a regular business try to get value out of AI? Let's take a break from marveling at protein folding, AlphaZero and dancing robots. Let's talk about normal people trying to do AI. This is the first in a series of about 5 posts on getting started with AI projects.

Photo by Phillip Glickman on Unsplash

Like every other business on the planet, your company has been talking about tech trends and how AI will disrupt business as we know it, and you've been following and sharing the tech newsletters, articles and webinars. You've asked your teams and other units to embrace this, of course, because you're a thought leader. You've put an innovation pipeline in place and everything. Now you're looking to sign off on your first AI initiative, perhaps because "Launch innovation projects" is one of your goals.

So someone comes to you and says:

"I have an idea, we should use AI to do this thing."

Great, people are catching on, digital transformation is happening from the ground up!

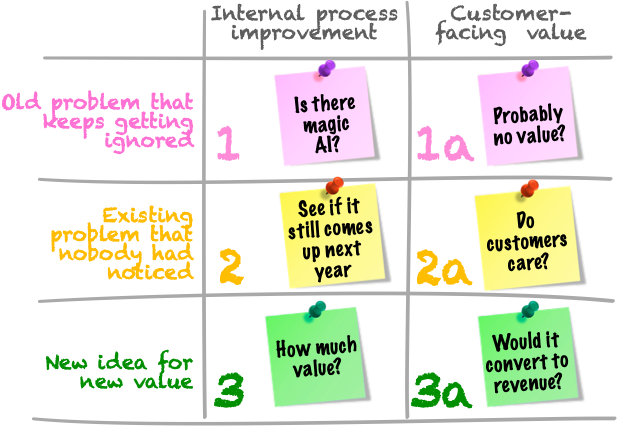

Without prejudice to your innovation pipeline criteria, one of three things applies:

- the thing in question is a problem that the company has had for a decade and that keeps coming up regularly at project or innovation meetings but never gets funded;

- the thing in question is a completely new problem that nobody had noticed before;

- the thing in question is a new idea that some other new thing (new data, knowledge or insights, for example) is now making possible.

Also, and this is a key distinction, either of the following is true:

- the thing is focused on an internal business process that needs to be improved;

- the thing is about customer-facing value.

No doubt I will write a dedicated post about this particular landscape one day, but for now, let's just frame these six cases (three kinds of idea, each either internal or customer-facing) and how we should think about them:

Set up the team

Now that an opportunity is identified, your immediate problem is that you don't work at a GAFANAMBATX+ or a VC-backed AI startup. This puts you in a tough spot: you don't have a team that knows how to do AI projects.

No, you don't.

"If you think you know how to do AI, then you don't know how to do AI" — Frenchman Diary (apocryphal)

What you likely have, on the other hand, are teams of developers scattered around your organization, maybe also a BI team, some of whom might have done some AI work before. They also keep up to date with the state-of-the-art literature in their field, so it's not like they don't know what you're talking about, and they're interested in doing something new, just like you.

Still, for the past few years, they have mostly delivered database front-ends, ETLs and MouseFlow integrations.

Now you have to get someone to assemble a project team.

I'll deal with AI project team composition in another post. For now, let's jump ahead: you found a way to fund the initiative (also a separate topic for a later post), and you've got your program director, a group of developers, some time from the UX team, some experts from product operations, some time from a product manager, a marketing SPOC and some back office contacts. Maybe you have external people from an AI vendor, too. Do you also have an AI Center of Excellence in your enterprise? They might show up.

You've done your job. Now, team, time to deliver!

This post is about what starts to happen after this point. I know, the intro is half the post.

Where's the data?

At the PI 0 planning (which boomers will recognize as a kickoff meeting), once the 180 minutes of slides outlining the plan, the insights from customers, the feature ideas, the AI quotes and inspiring motivational memes, the UX principles, the tentative revenue projections, the team org chart, the Kanban/Scrum cycles and the Agile delivery workflow presentations are over, the newly minted AI team will look at the rest of the group and ask:

"OK, so where's the training data?"

And this will turn out to be the only question that matters for the next few months.

The reason is that this question has multiple answers and packs in a large number of assumptions and expectations. It will take a long time to untangle what is behind it, so grab some popcorn and keep scrolling. Unpacking it will take several posts; I'm not sure how many yet.

Let's deconstruct the layers of implicit assumptions and ambiguities behind this question:

- "where is" - is there actually data somewhere that is available for this project?

- "the training" - oh, so we're doing Machine Learning?

- "data" - does the data that we have support the features that we want to build?

Now let's take each one of these items and break it down. We'll do the first one in this post and the rest will follow in subsequent posts.

Do we know where the data is?

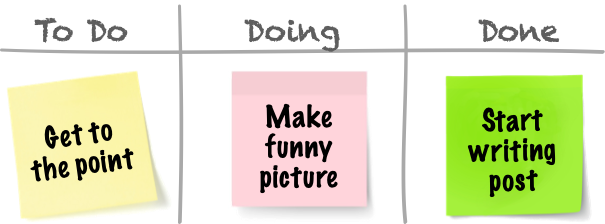

I'm a huge Agile guy. I drank the Agile Kool-Aid in my baby bottle. So I'm always tempted to go back to basics and have the PO just state the feature goal, and let the Agile team start the conversation to flesh out the details of how to accomplish the goal.

In my dreams I see foundation calls where the PO simply states "As a procrastinating user of the product, I want to know if it's going to snow tomorrow so that I can plan to call in sick", and waits for the unerringly insightful and creative questions from the team as they build their user story backlog.

Now, if you're working for the national weather service, a user story like this might be just another boring day at work for the dev team. If you're not the weather service, though, then it's not an AI initiative for you, because it's not reasonable to develop a weather forecasting model in your company. To deliver that feature, devs will instead do 20 minutes of research to see whether the national weather service has an API they can use (they'll still size the story at 8 points, though, because, you know). Remember that's always an option.

What I'm trying to say is that, in order to launch an AI initiative, I want to make sure that people have a good sense of what data we already have access to that we can use to create the value that we want.

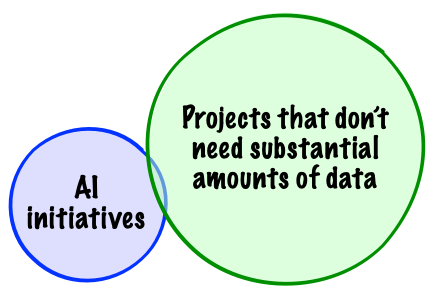

There is a very large number of different types of things that one can do with "AI". Very few of them, however, don't require substantial amounts of data.

The reason I bring up Agile here is to highlight the inherent tension between the canonical Agile dreamworld I allude to above, which is a reasonable utopia to strive for in many circumstances, and projects involving a lot of data.

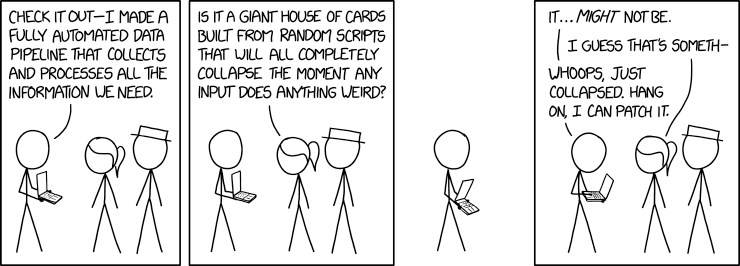

Even for systems where you have large teams in charge of every piece of data, such as banking or insurance systems, the data question is not obvious. Any process involving data pipelines, transformation and extraction, even well-governed ones, is fraught with issues around data integrity, legacy standards, data semantics, interoperability, system complexity and predictability, and so on.

The picture is blurrier for projects where data is the unstructured raw material for some other value that the software has to create, where the value comes from the recursive admixture and interplay of software and data. In other words, projects where data is not just something to be transported and properly displayed, but has to be interpreted by algorithms. This is really what I call "AI" projects.

I say "blurry" because it's not necessarily more complex, but it's different and less predictable. The reason for this is that, depending on the type of algorithm that you will be using, and, most importantly, what intelligence you want to get from the data, it may be OK for you to live with dirty data.

I will do deeper dives into data-intensive LEAN/Agile projects, and the question of data in AI algorithms, in later posts. For now, let's observe that we can't just start from a feature story and expect the team to discover and figure out what to do with the data one sprint at a time. Somebody, or a team, has to own the data problem, which is a lot like the system architecture problem, only more complicated. In the next couple of posts, we'll get into why that is.

The first question you should ask when someone has an AI idea is whether there is a reasonably detailed description of the data available to your company that either contains the intelligence you're looking for or that you can add value to, and of who owns it.

Are we doing Machine Learning?

OK, so they have data now, devs know how to get it and update it, and everybody agrees that it probably contains information they need. Now the question is: what are they going to do with this data?

This is what we'll start getting into in the next post. If you haven't already, subscribe below to receive it in your inbox next week and/or to leave comments or questions on this blog.
